Natively Adaptive Interfaces: A new framework for AI accessibility

Learn how Google's NAI framework uses AI to make technology more adaptive, inclusive and helpful for everyone.

Reinforcement Learning

Reinforcement learning, self-supervised learning, agents

Google Cloud built an industry-first AI tool to help U.S. Ski and Snowboard athletes.

Learn more about Google’s new ad that will run during football’s Big Game on February 8.

When AlphaFold solved the protein-folding problem in 2020, it showed that artificial intelligence could crack one of biology’s deepest mysteries: how a string of amino acids folds itself into a working molecular machine. The team at Google DeepMind behind that Nobel Prize-winning platform then turn

In 1930, a young physicist named Carl D. Anderson was tasked by his mentor with measuring the energies of cosmic rays—particles arriving at high speed from outer space. Anderson built an improved version of a cloud chamber, a device that visually records the trajectories of particles. In 1932, he sa

At times, it can seem like efforts to regulate and rein in AI are everything, everywhere, all at once. China issued the first AI-specific regulations in 2021. The focus is squarely on providers and content governance, enforced through platform control and recordkeeping requirements. In Europe, the

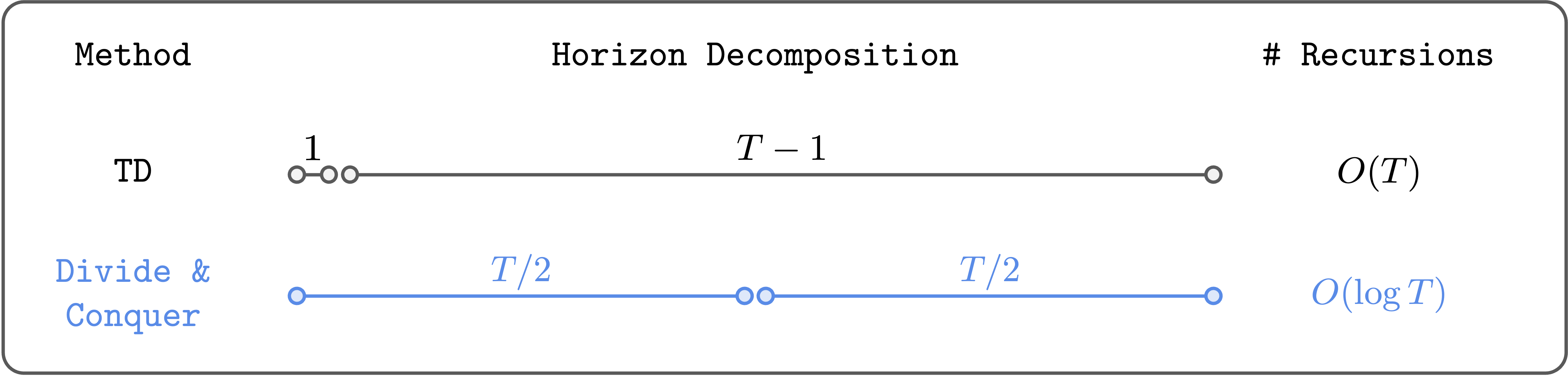

In this post, I’ll introduce a reinforcement learning (RL) algorithm based on an “alternative” paradigm: divide and conquer. Unlike traditional methods, this algorithm is not based on temporal difference (TD) learning (which has scalability challenges), and scales well to long-horizon tasks. We can

What exactly does word2vec learn, and how? Answering this question amounts to understanding representation learning in a minimal yet interesting language modeling task. Despite the fact that word2vec is a well-known precursor to modern language models, for many years, researchers lacked a quantitati

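Kudo Communication, a company specializing in artificial intelligence (AI) security solutions, announced on the 8th that it is further strengthening its organizational competitiveness by presenting the "Growth Flow Wheel" as the core framework of its "people-centered growth culture" in a recent training program for newly promoted employees.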